With the boom of horizontal scalability and microservice architecture, the world entered the “container deployment era”. Containers have been in use for more than ten years. Today at least a quarter of leading IT companies use container solutions in large-scale production, and this number is likely to grow further.

Many solutions on the market provide container runtimes and orchestration, such as Docker Swarm, Mesos, and others. However, more than half of the users choose Kubernetes as their infrastructure standard. Here we’ll talk about Kubernetes as a widespread and rapidly developing solution.

Let’s start with the characteristics and advantages of containers.

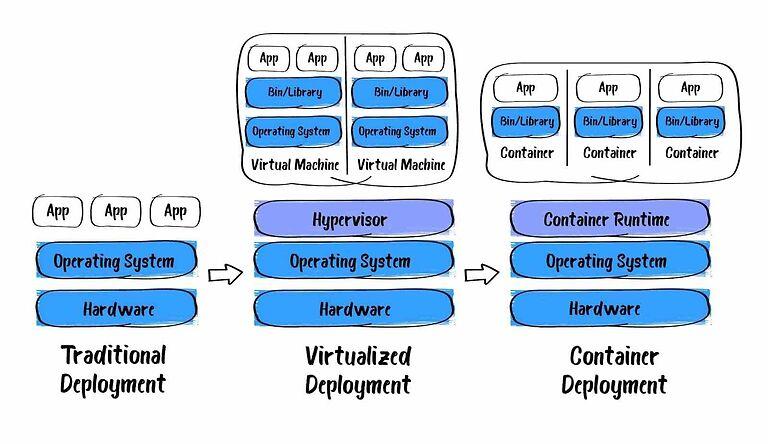

As Docker developers would say: “a container is a standard unit of software that packs an application with all the dependencies it needs to run – application code, runtime, system tools, libraries, and settings.” Compared to Virtual Machines, containers, in essence, are not full-fledged independent OSes, but configured spaces isolated within the same OS that use the host’s Linux kernel to access hardware resources, as well as the host’s memory and storage. Therefore, containers require significantly fewer resources to start and run, which positively impacts the project’s performance and budget.

Containers are lightweight, start quickly, and perform well, and with -as-a-Service solutions the maintenance of hardware and the OS is delegated to the provider. These advantages reduce the cost of developing and maintaining applications, which makes container-based solutions so attractive for businesses.

As for technical specialists, containers allow them to pack an application along with its runtime environment, thereby solving the problem of dependencies in different environments. For example, differences between software package versions on the developer’s laptop and the subsequent staging or production environments will sooner or later lead to failures. At the very least, dealing with them takes effort: someone has to analyze and fix the bugs that slipped into production. Using containers eliminates the “everything-worked-on-my-machine” problem.

Containers also reduce app development time and simplify app management in production.

Besides, containers are not tied to a hosting platform, which gives tremendous flexibility when choosing or changing a provider. One can run them without fundamental differences on a personal computer, bare metal servers, or cloud services.

Containers are a great way to bundle and run applications. In a production environment, DevOps engineers need to manage the containers that run the applications and ensure that there is no downtime. To withstand the estimated load, the number of running application containers must never drop below the required minimum. So some mechanism is needed that starts new copies of the app containers when the load grows or when some of them fail for any reason. This process is much easier when it is handled by a dedicated system, an “orchestrator” for these containers. All this leads to Kubernetes.
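To make the idea of an orchestrator more concrete, here is a minimal Python sketch of a reconciliation loop: it compares the desired number of containers with the ones actually running and corrects the difference. The `ToyOrchestrator` class and the container names are purely illustrative assumptions; this is a toy model, not how Kubernetes is implemented.

```python
from dataclasses import dataclass, field


@dataclass
class ToyOrchestrator:
    """Toy model of an orchestrator (hypothetical, for illustration only)."""

    desired_replicas: int
    running: list = field(default_factory=list)
    _counter: int = 0

    def _start_container(self) -> None:
        # Pretend to start a new container and remember its name.
        self._counter += 1
        self.running.append(f"app-{self._counter}")

    def reconcile(self) -> None:
        """Start or stop containers until the running count matches the desired count."""
        while len(self.running) < self.desired_replicas:
            self._start_container()
        while len(self.running) > self.desired_replicas:
            self.running.pop()


orchestrator = ToyOrchestrator(desired_replicas=3)
orchestrator.reconcile()

# Simulate a crashed container: the next pass starts a replacement.
orchestrator.running.remove("app-2")
orchestrator.reconcile()

# Simulate growing load: raise the desired count and reconcile again.
orchestrator.desired_replicas = 5
orchestrator.reconcile()
print(orchestrator.running)
```

The point of the sketch is that the operator only declares the desired state, and the loop keeps working toward it.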

Kubernetes (also known as K8s) is a platform that implements container orchestration in a cluster environment. It was originally used mainly with Docker containers, as they were highly popular and widespread. In recent Kubernetes versions, however, the built-in Docker Engine integration (dockershim) was deprecated and then removed. Kubernetes now talks to container runtimes such as containerd and CRI-O through the Container Runtime Interface (CRI). Images built with Docker still run on these runtimes.

Kubernetes significantly expands container capabilities, making it easier to manage deployment, network routing, resource consumption, load balancing, and fault tolerance of running applications. This platform is now widely offered by cloud providers within the -as-a-Service model. At the same time, nothing stops you from installing it on a set of physical or virtual machines, whether in the cloud or on-premises.

Kubernetes is a new step in the IT industry that allows simplifying the delivery of apps including the environment where these apps will run. With Kubernetes, it is possible to abstract the description of this environment from the hardware configuration.

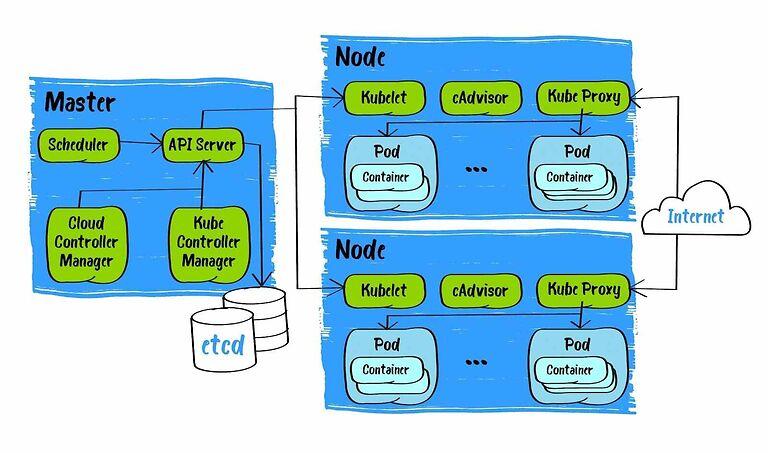

K8s allows us to create a cluster based on several physical or virtual machines. This cluster works as a single environment and consists of two types of nodes: master nodes, which control the cluster, and working nodes, which run the application containers.

When you deploy your applications in K8s, you tell the master to launch app containers. The master node schedules containers to run on the working nodes. Each working node communicates with the master through the Kubernetes API, which can also be used for direct management with REST calls.

To get started with Kubernetes, developers can use a lightweight implementation called Minikube. Minikube is a cross-platform tool that creates a simple single-node cluster on a local machine.
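As a rough illustration of direct management over REST, the Python sketch below builds (but does not send) a request that lists the Pods in a namespace. The resource path `/api/v1/namespaces/{namespace}/pods` is the standard one for Pods; the API server address and the bearer token are placeholder assumptions that you would replace with your cluster's values.

```python
import urllib.request

# Hypothetical values: replace with your cluster's address and a real token.
API_SERVER = "https://127.0.0.1:6443"
TOKEN = "REPLACE_ME"


def list_pods_request(namespace: str) -> urllib.request.Request:
    """Build (without sending) a GET request that lists Pods in a namespace."""
    url = f"{API_SERVER}/api/v1/namespaces/{namespace}/pods"
    return urllib.request.Request(
        url,
        headers={
            "Authorization": f"Bearer {TOKEN}",
            "Accept": "application/json",
        },
        method="GET",
    )


request = list_pods_request("default")
print(request.get_method(), request.full_url)
```

Sending the request with `urllib.request.urlopen` would additionally require a reachable cluster and TLS settings that trust its certificate, which is why the sketch stops at building it.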

To work with a Kubernetes cluster and to run an app in such an environment, one needs instructions that define the desired state of the app. These instructions are Kubernetes manifests: they describe what containerized code you want to run in a cluster and how. Basic manifests can be relatively simple. They are written in YAML or JSON, so writing a simple manifest is not a problem for a developer, and manifests can be managed using the GitOps model. But when moving from simple manifests in development to production, the level of K8s abstraction turns out to be so high that one needs a good understanding of how things work under the hood of a cluster.
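For illustration, here is a minimal sketch of a basic manifest, generated as JSON from Python. The application name `web`, the image `nginx:1.25`, and the helper function `deployment_manifest` are assumptions chosen for the example; the field names follow the `apps/v1` Deployment schema.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int) -> dict:
    """Return a minimal Deployment manifest as a plain dictionary."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            # Desired state: how many Pod replicas should be running.
            "replicas": replicas,
            # The selector must match the labels of the Pod template below.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": 80}],
                        }
                    ]
                },
            },
        },
    }


manifest = deployment_manifest("web", "nginx:1.25", replicas=3)
print(json.dumps(manifest, indent=2))
```

Saved to a file such as `deployment.json`, the output could be applied with `kubectl apply -f deployment.json`.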

Kubernetes has a lot of Kinds that describe and manage the behavior of containers inside a cluster. K8s itself is primarily a Container Orchestrator and therefore its parts are containers as well. This fact leads us to 2 main peculiarities:

A node is a component part of the Kubernetes cluster. The master node (there can be several of those) controls the cluster through the scheduler and controller manager, provides an interface for interacting with users through the API server, and contains the etcd store with the cluster configuration, the statuses of its objects, and metadata. The working nodes are intended exclusively for launching and running containers; for this, two Kubernetes services are installed on each of them: a network proxy (kube-proxy) and a node agent (kubelet).

A Namespace is a structural object that allows you to divide cluster resources between environments, users, and teams.

A Pod is the smallest unit in Kubernetes: a group of one or more containers that work as one service or application, assembled for joint deployment on a node. It makes sense to group containers of different types in a Pod when they depend on each other and, therefore, must run on the same node to reduce the response time when they interact. An example: containers with a web application and a caching service.

A ReplicaSet is an object that describes and maintains the number of Pod replicas running on the cluster. Setting the number of replicas to more than one is required to improve the application’s fault tolerance and scalability. The Deployment kind provides more capabilities and is used more often for apps, but in some particular cases a ReplicaSet is enough.

A Deployment is an object that declaratively describes Pods, the number of replicas, and the strategy for replacing them during an update. A Deployment updates Pods according to the declared rules, which makes updating already released services or applications as smooth and painless as possible.

A StatefulSet allows you to describe and preserve the state of Pods across restarts: a unique network address and their disk storage. This is how the stateful application model is implemented.

A DaemonSet is a kind that ensures a copy of a specified Pod runs on each node (or on several selected nodes). It is used to run cluster storage, log collection, or node monitoring.

Job and CronJob are the kinds that launch the specified Pod once (or regularly on a schedule, in the case of CronJob) and monitor the result of its execution.

A Service is a virtual kind for publishing an application as a network service, which also implements load balancing between application Pods.
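The web application plus cache example could look roughly like the following Pod manifest, again written as a Python dictionary and printed as JSON. The image `example/web-app:1.0`, the Pod name, and the ports are hypothetical; `redis:7` stands in for the caching service.

```python
import json

# A Pod with two containers that are always scheduled onto the same node.
pod_manifest = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web-with-cache", "labels": {"app": "web"}},
    "spec": {
        "containers": [
            {
                "name": "web",
                "image": "example/web-app:1.0",  # hypothetical application image
                "ports": [{"containerPort": 8080}],
            },
            {
                "name": "cache",
                "image": "redis:7",
                "ports": [{"containerPort": 6379}],
            },
        ]
    },
}

container_names = [c["name"] for c in pod_manifest["spec"]["containers"]]
print(json.dumps(pod_manifest, indent=2))
```

Containers in one Pod share a network namespace, so in this sketch the web container could reach the cache at `localhost:6379`.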

K8s is not limited to its basic functionality: it can be extended with Custom Resource Definitions (CRDs). A CRD allows us to add or even “invent” new custom kinds in addition to the out-of-the-box ones.

K8s is in essence a great abstraction tool: there is no need to think deeply about technologies, hardware resources, their maintenance, and so on. Let’s look a bit deeper.

First of all, there is no need to dive deep into hardware characteristics and parameters unless the project itself has specific requirements. For custom solutions, developers or DevOps engineers describe these custom adjustments in the manifests and may even add checks for them.

For example, if your project uses a database and it’s not a cloud database -as-a-Service, you should have one or several nodes that are more powerful than the others (with more memory and fast storage), and you simply “tell” Kubernetes via manifests to run the database Pods on exactly these nodes.
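One common way to pin Pods to particular nodes is a `nodeSelector`. The sketch below assumes that the more powerful nodes carry a label `workload=database`; the label, the Pod name, and the image are hypothetical choices for this example.

```python
import json

# Assumption: the powerful nodes were labeled beforehand, for example with
#   kubectl label nodes <node-name> workload=database
database_pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "database"},
    "spec": {
        # Schedule this Pod only onto nodes that carry the matching label.
        "nodeSelector": {"workload": "database"},
        "containers": [{"name": "postgres", "image": "postgres:16"}],
    },
}

print(json.dumps(database_pod, indent=2))
```

In a real project the same `nodeSelector` would usually sit in the Pod template of a StatefulSet or Deployment rather than in a bare Pod.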

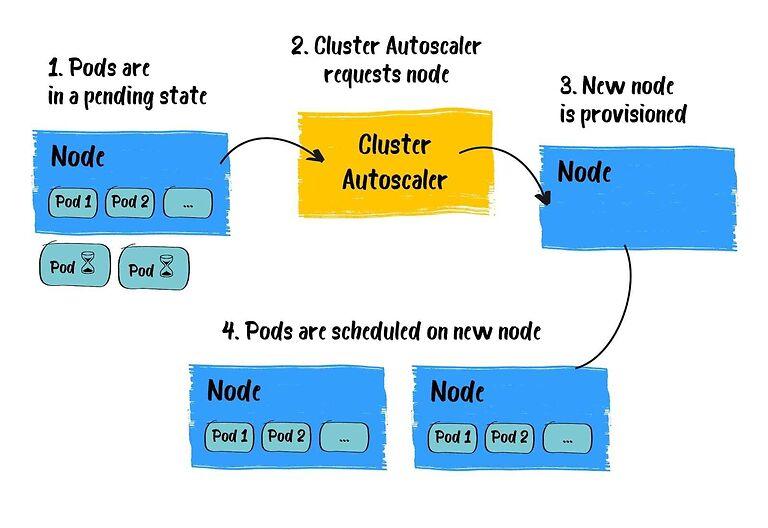

Moreover, you can limit all the needed resources (RAM, CPU, etc.) yourself. A lack of processing capacity can be compensated for by cluster monitoring plus node scaling. In the cloud, this process can even be automated with Cluster Autoscaler, which scales the target cluster up or down so that it can satisfy the changing demands of the workloads running on it.

Autoscaling is a great solution when you don’t know exactly what the load will be or how many resources the app needs to handle it. An example is ticket sales for a football match, when request traffic spikes within a short period of time. With Cluster Autoscaler, the cluster expands automatically to cope with the load and shrinks back after the load decreases.
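Resource limits are declared per container. The following sketch shows the `resources` section of a container spec; the helper `container_with_resources` and the concrete numbers are assumptions picked for illustration, not recommendations.

```python
import json


def container_with_resources(name: str, image: str) -> dict:
    """Return a container spec with example CPU and memory requests and limits."""
    return {
        "name": name,
        "image": image,
        "resources": {
            # Requests: what the scheduler reserves for the container on a node.
            "requests": {"cpu": "500m", "memory": "1Gi"},
            # Limits: the most the container is allowed to consume.
            "limits": {"cpu": "1", "memory": "2Gi"},
        },
    }


container = container_with_resources("web", "nginx:1.25")
print(json.dumps(container, indent=2))
```

Here `500m` means half a CPU core and `Gi` stands for gibibytes.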

With the help of namespaces, you can isolate several projects or different stages of one project (non-prod and prod environments) from each other in one cluster. With RBAC you can also restrict access to valuable resources based on the role the user holds. All this gives us predictable application security.

We all know that microservice architecture is widespread now. For example, if we have a website divided into modules that operate separately, the failure of one will not affect the whole system. Moreover, each module can be updated separately with a Deployment, so that any problems with the update affect only a small part of the audience, or nobody at all. And if something does go wrong, versioning will help us roll the update back.

There is no actual OS dependency: you have a unified interface that you interact with via standard commands or the API.

K8s integrates with monitoring systems, CI/CD systems, secret storages, and many more. As for secrets: if you don’t want them to be stored in the cluster, you can integrate K8s with an external secret storage and keep them there, while K8s accesses them securely when needed.
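As a hedged sketch of such a restriction, the two manifests below give a user read-only access to Pods in a single namespace. The namespace `staging`, the user name `jane`, and the object names are hypothetical; the structure follows the `rbac.authorization.k8s.io/v1` API.

```python
import json

NAMESPACE = "staging"  # hypothetical namespace
RBAC_GROUP = "rbac.authorization.k8s.io"

# Role: which actions are allowed on which resources inside the namespace.
role = {
    "apiVersion": f"{RBAC_GROUP}/v1",
    "kind": "Role",
    "metadata": {"name": "pod-reader", "namespace": NAMESPACE},
    "rules": [
        {
            "apiGroups": [""],  # "" is the core API group, where Pods live
            "resources": ["pods"],
            "verbs": ["get", "list", "watch"],
        }
    ],
}

# RoleBinding: who receives the Role.
role_binding = {
    "apiVersion": f"{RBAC_GROUP}/v1",
    "kind": "RoleBinding",
    "metadata": {"name": "read-pods", "namespace": NAMESPACE},
    "subjects": [{"kind": "User", "name": "jane", "apiGroup": RBAC_GROUP}],
    "roleRef": {"kind": "Role", "name": "pod-reader", "apiGroup": RBAC_GROUP},
}

print(json.dumps([role, role_binding], indent=2))
```

With only this binding, the user can view Pods in `staging` but cannot change them or see anything in other namespaces.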
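To see why a gradual update touches only part of the running Pods, consider the two parameters of the rolling update strategy of a Deployment: `maxSurge` (rounded up when given as a percentage) and `maxUnavailable` (rounded down). The helper below is a simplified arithmetic sketch with an assumed function name, not code taken from Kubernetes.

```python
import math


def rolling_update_bounds(replicas: int, max_surge_percent: int,
                          max_unavailable_percent: int) -> tuple:
    """Return (minimum available Pods, maximum total Pods) during a rolling update."""
    # A percentage maxSurge is rounded up, a percentage maxUnavailable is rounded down.
    surge = math.ceil(replicas * max_surge_percent / 100)
    unavailable = math.floor(replicas * max_unavailable_percent / 100)
    return replicas - unavailable, replicas + surge


min_available, max_total = rolling_update_bounds(10, 25, 25)
print(min_available, max_total)  # prints: 8 13
```

With 10 replicas and both values at 25%, at least 8 Pods keep serving traffic throughout the update. If the new version misbehaves, `kubectl rollout undo` returns the Deployment to its previous revision.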

K8s was created by Google and released as open source. This is an advantage and a disadvantage at the same time, because technical support can become a problem: in case of an urgent issue with the Kubernetes platform itself, there are no experts obliged to fix it. So whoever uses plain K8s should understand that it is supported only by the open-source community.

But businesses need support for their software platforms, so some companies decided to release Kubernetes as a commercial product. One of them is Red Hat, a company that provides supported open-source software products to enterprises. It released its own container orchestration platform based on Kubernetes, named OpenShift, with enterprise lifecycle support.

It is also worth mentioning Rancher, which offers the functionality of a commercial K8s distribution in an open-source package. It is quite popular now, since it makes it easy to create clusters on bare metal with Rancher Kubernetes Engine as well as on managed Kubernetes services such as AKS and GKE. Rancher is not a redesign of Kubernetes, but rather a platform that makes deploying and using it easier, even in multi-cluster environments.

The Kubernetes interface is implemented as a number of rather complex manifests, or descriptions, for each abstracted kind. To understand all the peculiarities and to be able to configure and debug such systems, one should have a good understanding of microservice architecture and containerization principles and, therefore, a good knowledge of Linux itself as the basis. So on a small or mid-sized project, it would be a tough start for a developer to work with Kubernetes from scratch without a fair knowledge of the technologies mentioned.

As for projects, there are cases when startups hope for a fast start and a high load on their systems, and eventually their expectations fall short. This means that extra effort and resources were spent on implementing microservices and container orchestration, and the result is overhead. This point leads us to the question below.

Since Kubernetes has been hyped for the last several years, it is common for a client with a small project to request Kubernetes from the very beginning.

But Kubernetes must be used deliberately. Working with Kubernetes should be considered when you have a really large number of microservices, when there are certain requirements for the level of availability of your project or system, when several teams are working on the application, or when infrastructure is needed to automate deployments and tests more efficiently. At this point, it’s really worth thinking about Kubernetes.

If you are a small company or if you have a monolithic app that will be hard to split into microservices, Kubernetes is definitely not the best decision.

In the case of a microservice solution, Kubernetes is rather a blessing. Gradually, the application becomes overgrown with logic, and there are more and more microservices. Docker alone is no longer sufficient. On top of that, the clients probably also want some kind of fault tolerance.

Gradually, the company hits the ceiling and needs a fresh and highly productive solution. And here, of course, you need a container orchestrator. K8s is software that manages all the microservices: it looks after them, repairs them, moves them within the cluster, builds networks and routes their traffic, and, in general, serves as the entry point to the entire infrastructure of the project.

The IT industry is highly flexible and changeable. The software is more and more complex each year. To keep up to date, businesses need to use the newest tools and solutions.

If we look at this issue in terms of deployment, a couple of years ago using containers in production was considered an unreliable solution. However, time does not stand still, and the industry has evaluated and appreciated the prospects of containers. Now the variety of container-based solutions is more and more convenient and attractive for businesses.

As for Kubernetes, if implemented and maintained correctly, it offers developers, DevOps engineers, and business owners great benefits: scalability, workload portability, more efficient app development and deployment, and effort and cost optimization.

Our DevOps team has been working with containers, and Kubernetes in particular, for a while. If you are on the way to digital transformation with K8s, we are ready to help! Contact us for more information.